As a digital humanities librarian, E. Leigh Bonds (The Ohio State University) undertook an institutional environmental scan as the basis for assessing needs, identifying gaps, and developing recommendations. In this post, Bonds details her approach and framework, which prompted conversations and coordination across campus.

In August 2016, I became The Ohio State University’s first Digital Humanities Librarian. I’d already been “the first” at another institution, so I was acutely aware that the distinction is both a gift and a curse: on one hand, I have the opportunity to define the role; on the other, I have the responsibility of defining it. More importantly, I knew “the first” typically has the task of mapping previously uncharted (or partially charted) territory, namely the scope of digital humanities on campus. Exactly one week into my new position, I received that first charge: conduct an environmental scan of DH at OSU.

Having never conducted a formal environmental scan before (or even watched someone else conduct one), I turned to the literature: no one charged with such an undertaking, regardless of campus size, does so without consulting those who have already charted their own environments. From recent publications (see Works Consulted), I gleaned that the scan should determine the nature of DH work underway, researchers’ interests, researchers’ needs, existing resources, and gaps in resources. All of the information gathered would then be compiled into a report (in my case, an internal report for the Libraries’ administration and the head of the research services department) that included recommendations based on the findings.


What follows is my strategy for making these determinations and framing my report. Rather than provide a one-size-fits-all template (which would most likely work for no one), I explain the process I followed—and the thinking behind that process—to guide other “firsts.”

Identifying Key Researchers

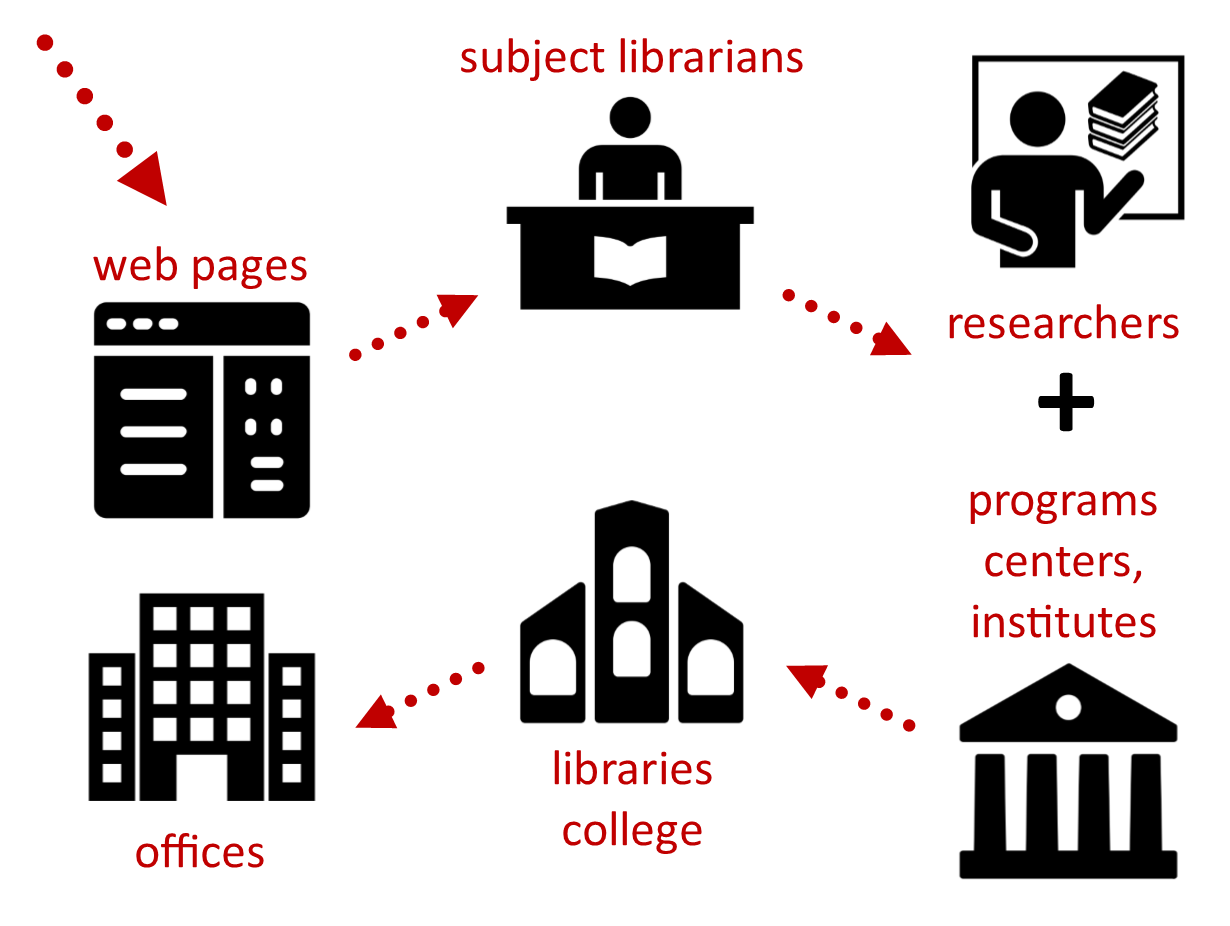

Determining who was doing DH and who was supporting that work seemed the logical place to start. I spent the first week scanning the web pages of the twenty-three humanities and arts departments, skimming every faculty page, and noting the names of those I needed to meet and the work they were doing or supporting. Unfortunately, very few faculty described their work as “digital humanities,” so I found myself delving into CVs, looking for DH-related keywords in publications and presentations (network, data, mapping, visualization, computational, digital archive, etc.).[1. Several months after my initial scan of faculty pages, I participated in the University of Illinois iSchool Spring Break Program and guided three MLIS students (Brett Fujioka, Tanner Lewey, and Abby Pennington) through a second scan of humanities faculty pages and CVs to identify faculty who either use DH methods and tools or have research interests that lend themselves to their use.]
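The keyword search described above was done by reading. Where CVs can be saved as plain text, a first pass could be partially automated; the following is a minimal, hypothetical Python sketch of that idea, not a tool used in this scan. The names `DH_KEYWORDS`, `find_dh_keywords`, and `rank_faculty` are illustrative assumptions, and the keyword list simply reuses the examples given in the paragraph above.

```python
import re

# Hypothetical keyword list, taken from the examples in the post; extend as needed.
DH_KEYWORDS = ["network", "data", "mapping", "visualization", "computational", "digital archive"]


def find_dh_keywords(cv_text, keywords=DH_KEYWORDS):
    """Count whole-word, case-insensitive matches of each keyword (simple plurals included)."""
    lowered = cv_text.lower()
    counts = {}
    for kw in keywords:
        # \b...s?\b matches "network" and "networks" but not "networked"
        pattern = r"\b" + re.escape(kw.lower()) + r"s?\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[kw] = hits
    return counts


def rank_faculty(cvs):
    """Given {name: cv_text}, return (name, total_hits) pairs with at least one hit, highest first."""
    scored = [(name, sum(find_dh_keywords(text).values())) for name, text in cvs.items()]
    return sorted((s for s in scored if s[1] > 0), key=lambda s: s[1], reverse=True)


if __name__ == "__main__":
    sample = {
        "Faculty A": "Mapping medieval trade networks; data visualization.",
        "Faculty B": "Close reading of Victorian poetry.",
    }
    print(rank_faculty(sample))
```

A match only flags a CV for closer reading: a word like “data” appears in many non-DH contexts, so counts like these are a starting point for conversations with subject librarians, not a finding in themselves.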

To validate my findings, I next met with the subject librarians for each of these departments. I shared the findings from my “distant scan”; they gave me further information about what I’d found and, in a few cases, about what I hadn’t found. Additionally, these meetings gave me the opportunity to talk with my new colleagues about DH and my role, and to gauge their understandings, interests, and experiences with DH work.

In the subsequent months, I met with the majority of the faculty on my list. I asked them about their interests, experiences, and ideas, both for their research and for integrating digital humanities methods and tools into their courses; about the resources they have and those they lack; about their graduate students interested in this work; and about what they envision for DH on campus. From these conversations, I learned about the various digital methods and tools being used and the resources available and still needed on campus. I also learned about initiatives faculty had undertaken before my arrival, such as establishing a working group and proposing an undergraduate minor.

In addition, I scheduled appointments with the directors of the humanities- and arts-related programs, centers, and institutes on campus with affiliated faculty doing DH work. During these meetings, I asked them many of the same questions I asked the faculty, but I focused more on the forms of support they provide and the resources they have available for humanities research.

Identifying Support & Resources

As I met with the key researchers, I also began meeting with faculty and staff in several support units across campus. Within the Libraries, I found staff supporting digital research projects in a variety of capacities: special collections, archives, digital imaging, digital initiatives, copyright, metadata, acquisitions, and application development. In meetings with each, we discussed their involvement in this work thus far, their interest in continued involvement, and their resources. In the College of Arts and Sciences Technology Services, I met with three department heads to learn more about the research consulting (computation and data storage), academic technology (media and eLearning resources), and application development services. From them, I learned the specifics of the services offered, who in the humanities and arts was using those services, the types of projects they’d been involved in, and, in the case of application development, what costs were involved. In the Office of Research, I met with the development specialist to discuss funding opportunities for DH work and with a research integrity officer to discuss university policies governing intellectual property and data ownership. It was important not only to learn about these matters myself but also to learn where to refer faculty with questions about funding and intellectual property as they arise.

Defining DH

In nearly all of my conversations, someone inevitably asked, “What is digital humanities?” Rather than tell, I preferred to show, so I explained DH in terms of the range of work being done by researchers across humanities and arts disciplines. I also clarified that, for the purposes of my environmental scan, I was less interested in labeling than in learning what researchers were doing or wanted to do, and what support they needed to do it. Ultimately, I viewed the environmental scan as the first step towards coordinating a community for researchers with DH interests.[2. Approaching DH from “the liminal position” of the Libraries, outside “of discipline or department,” as described by Patrik Svensson, I adopted the view of “the digital humanities as a meeting place, innovation hub, or trading zone,” which I see as essential for building the community I envision.]

For the report itself, however, I realized I had to tell in addition to show. To reflect “the breadth of DH work being produced at OSU,” I defined the digital humanities simply as “the application of digital or computational methods to humanities research.” I explained it “involves both the interpretive, critical analysis skills developed through humanities study and the technical skills required by the digital/computational method.” “For this reason,” I added, “digital humanities projects are often collaborative with each collaborator contributing a different skillset required by the critical or digital aspects of [the] project.” I created a simple graphic to show “the extent of humanities analysis of content and context, and the variety of digital and computational methods currently being employed to conduct and/or disseminate that analysis.”

As Alan Liu remarked about his “Map of the Digital Humanities,” I never intended this to be “the last word.” Instead, I wanted it to be a catalyst for continuing conversations on our campus.

Tracking DH

Likewise, I wanted the history of DH on campus to raise awareness that researchers had been “doing DH” for quite some time—without an institute, center, program, or other form of structured support. For this section of the report, I opted to show rather than tell.

Admittedly, this timeline of initiatives and projects was not comprehensive; it did, however, reflect two key points: DH had been on campus since the early 2000s, and projects were increasing both in number and in complexity.

Framing the Report

As I began thinking about how to structure the report, I realized I needed a framework, and a clear set of terms, for discussing project requirements. Using the phases outlined by the National Endowment for the Humanities Office of Digital Humanities, I created a DH project lifecycle graphic to provide that framework.

In the report, I then explained the specific support and resource requirements for each stage, including consultations with experts in digital methods, content/project management, platforms/tools, and funding.

Existing Support & Resources

That framework then provided the structure for the subsequent discussion of existing support and resources related to specific project requirements:

- digital content

- content management and project management

- DH methods, platforms, and tools

- development, hosting, and curation

In each of these sections, I outlined who provides the support and resources, both on campus and off-site; what specific support and resources are available; whether costs are involved; and any conditions that apply.

The section about digital content focused on three common forms: content in older formats requiring digitization, content already in digital formats, and content generated by the project. I listed the specific sources for each form (e.g., researcher, collaborator(s), OSU Libraries, external libraries/museums, vendors, work for hire) and indicated whether any costs are involved or any conditions apply (e.g., limited format types, limited permissions). Because work for hire (e.g., students, staff, external services) involves costs, I differentiated it from collaborator(s) as a source.

The discussion in the development, hosting, and curation section was further divided by whether or not a project has funding. The infographic included in my report listed the specific units on campus providing support in these areas and included off-site options as a resource for hosting and curation. Again, I differentiated work for hire from collaborator(s) for cost reasons.

To highlight gaps, it was important to emphasize that some resources remain conditional (indicated by a dashed outline) even when a project is funded, and some resources involve costs (indicated by a dollar sign) even when a project is not funded.

Surveying Key Researchers

Finally, I invited thirty-eight faculty identified during the scan to complete a survey about their experiences developing a DH project and about their needs (and the needs of their graduate students).[3. The survey in Ithaka S+R’s “Sustainability Implementation Toolkit,” by Nancy Maron and Sarah Pickle, guided the one I developed, particularly the questions related to the support received for digital projects.] I asked those who’d developed a project to rate the level of support they received for specific stages of the project lifecycle, from refining project scope through development to maintenance, and to identify which units on campus provided that support. I asked about funding, collaborators, external support, and work for hire for past and current projects, and I asked everyone what support they would need to develop a future project. The twenty responses I received (a 52.6 percent response rate) validated and further supported the findings from my conversations.

Additionally, I asked what workshops/sessions would interest them and their grad students, grouping the possibilities into three categories:

- best practices—like project management and content/data management

- DH methods—like data scraping, spatial analysis, visualization

- tools/platforms/languages—like ArcGIS, Python, Scalar

These categories reflect the scaffolding I envision for DH programming, with sessions, workshops, and working groups building upon one another and becoming increasingly specialized and project-specific.

Identifying Gaps & Making Recommendations

All of the information I gathered during my first eight months at OSU exposed seven foundational gaps related to infrastructure, development/design support, and learning opportunities. Of course, anyone reading the report could have surmised the list themselves: I’d pinpointed the project requirements for each stage, reviewed the existing support and resources, and conducted a survey of researchers using DH methods and tools (the results of which were included in an appendix).

As a result, the recommendations in the concluding “Future Directions” section prioritized addressing the gaps in the infrastructure—on which the majority of future initiatives rely—followed by providing learning opportunities for faculty and graduate students. I subdivided the section into recommendations for my role as the Digital Humanities Librarian; for the Research Commons as the research hub; for the Libraries as both DH producers and supporters; for a partnership between the Libraries and the College of Arts and Sciences; and for consortial partnerships. In making the recommendations, I took capacity and scalability into account, knowing that many of the infrastructural gaps—as well as researchers’ desires for academic programs and centralized support—could not be remedied by the Libraries alone. For the purposes of these recommendations, whether the Libraries or the College could or would meet these needs or fulfill researchers’ desires didn’t matter: I simply identified them as areas to address.

To show what others in the Big Ten Academic Alliance and other R1 institutions with established DH programs were doing, I included a spreadsheet in an appendix indicating whether, for these institutions:

- there is a DH center on campus

- the DH center is a partnership between the college and the library

- the library has its own center

- there is an undergraduate minor

- there are other undergraduate programs

- there is a graduate certificate program

- the campus is a Digital Humanities Summer Institute (DHSI) institutional partner

- the campus is a Reclaim Hosting Domain of One’s Own Institution

Not only did the spreadsheet provide a quick point of reference for who’s doing what, it also provided administrators with points of contact for further investigation.

Following Up

In May, I submitted my environmental scan report to the head of the research services department, who forwarded it to the associate directors and the director. Over the summer, the report circulated more widely within the Libraries, sparking a number of important conversations about workflows, scope, and scalable support structures. It also directly informed the planning for workshops, learning sessions, and working groups through the Research Commons.

In the fall, conversations across campus ensued as I shared my environmental scan with faculty, institute/center/program directors, and technology services directors. In addition to partnering with colleagues to teach sessions on the top areas of interest identified by the survey, I held a “DH Forum” for faculty and graduate students to review the key findings in my environmental scan and “discuss ideas for advancing the digital humanities at OSU.”

As administrators and deans discuss funding and partnerships in the coming months, I’ll be consulting with researchers, collaborating on projects, and coordinating the DH program. Each of these points of contact provides opportunities to continue environmental scanning, to expand the campus support network, and to establish the community of researchers. Rather than the culmination, the report was, in fact, the beginning—the first iteration in the environmental scanning process that will continue both formally and informally for the remainder of my time as the first.

Works Consulted

Anne, Kirk M., et al. “Building Capacity for Digital Humanities: A Framework for Institutional Planning.” EDUCAUSE, 31 May 2017. Accessed 28 Dec. 2017.

Brenner, Aaron. “Audit of ULS Support for Digital Scholarship.” University of Pittsburgh, Sep. 2014. Accessed 28 Dec. 2017.

Lewis, Vivian, Lisa Spiro, Xuemao Wang, and Jon E. Cawthorne. “Building Expertise to Support Digital Scholarship: A Global Perspective.” Council on Library and Information Resources, Oct. 2015. Accessed 28 Dec. 2017.

Lindquist, Thea, Holley Long, and Alexander Watkins. “Designing a Digital Humanities Strategy Using Data-Driven Assessment Methods.” dh+lib, 30 Jan. 2015. Accessed 28 Dec. 2017.

Lippincott, Joan K. and Diane Goldenberg-Hart. “Digital Scholarship Centers: Trends & Good Practice.” Coalition for Networked Information, Dec. 2014.

Maron, Nancy L. and Sarah Pickle. “Sustainability Implementation Toolkit: Developing an Institutional Strategy for Supporting Digital Humanities Resources.” Ithaka S+R, 18 June 2014. Accessed 28 Dec. 2017.

Rosenblum, Brian and Arienne Dwyer. “Copiloting a Digital Humanities Center: A Critical Reflection on Libraries-Academic Partnership.” Laying the Foundation: Digital Humanities in Academic Libraries, 15 Mar. 2016, pp. 111-26. Accessed 28 Dec. 2017.
